Raspberry Turk

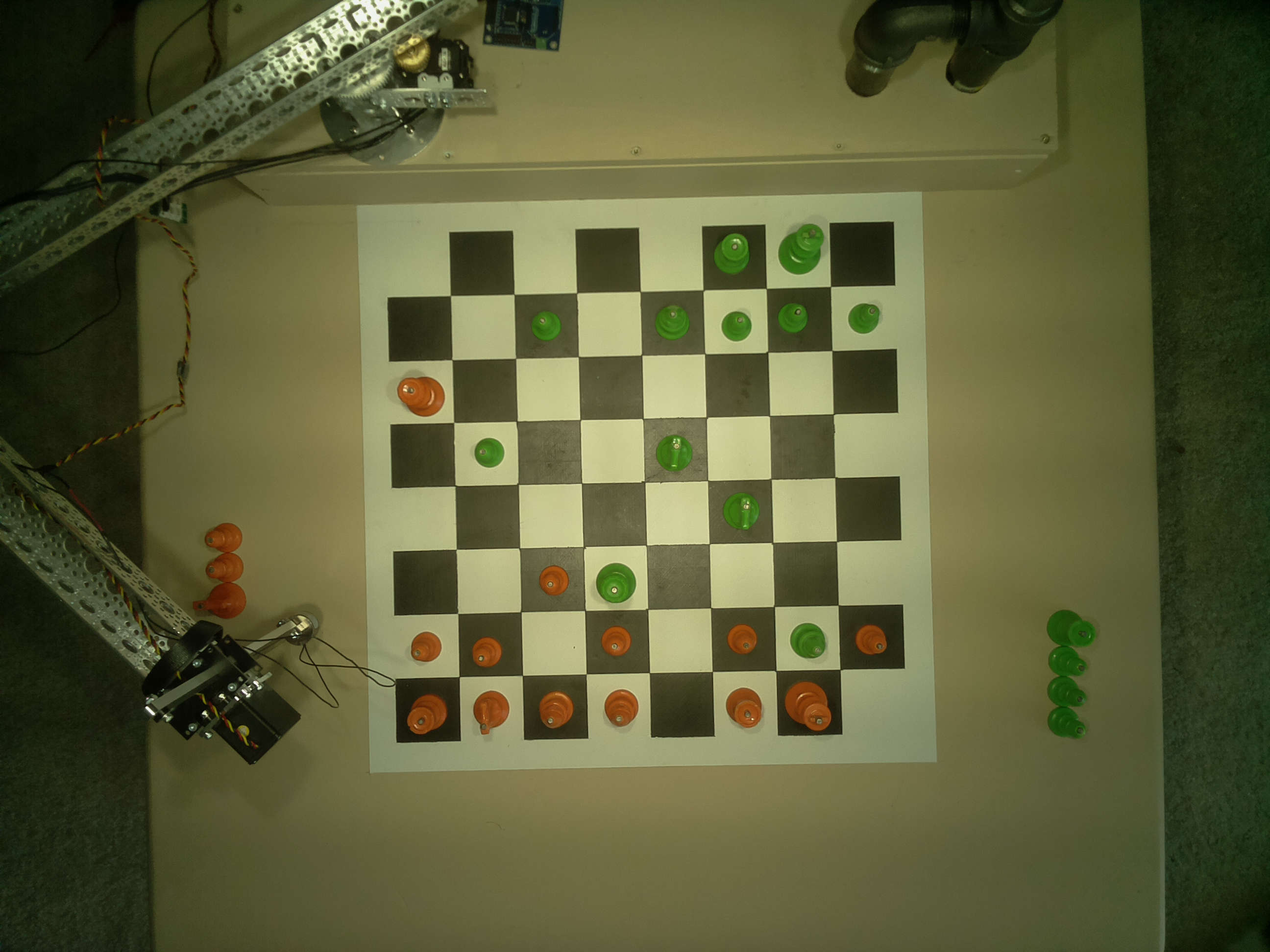

The Raspberry Turk is a robot that can play chess—it's entirely open source, based on Raspberry Pi, and inspired by the 18th century chess playing machine, the Mechanical Turk.

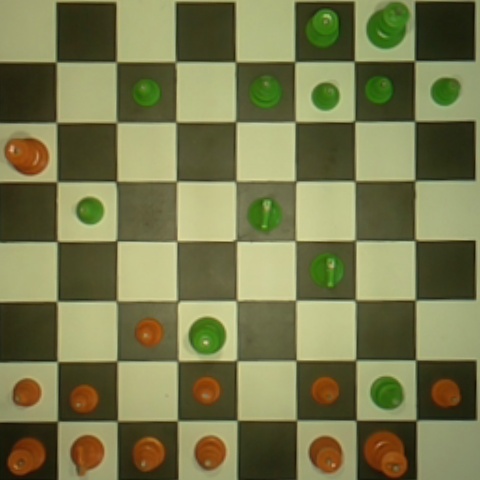

The Raspberry Turk uses a Raspberry Pi camera module and computer vision algorithms to determine which pieces are in which squares. In order to see the board properly, it needs to be calibrated first.

From chess_camera.py

def current_chessboard_frame(self):

_, frame = self._capture.read()

h, w = frame.shape[:2]

M = get_chessboard_perspective_transform()

bgr_img = cv2.warpPerspective(frame, M, (BOARD_SIZE,BOARD_SIZE))

img = cv2.cvtColor(bgr_img, cv2.COLOR_BGR2RGB)

flipped_img = cv2.flip(img, -1)

return ChessboardFrame(flipped_img)

In order to go from A to B, cv2.warpPerspective from OpenCV is used.

There is a small script used to find the correct transformation matrix. The full source can be found here.

Essentially it does the following:

cv2.findChessboardCornerscv2.getPerspectiveTransformIf the camera moves or the robot is deconstructed and reconstructed the perspective transformation matrix can be easily recalculated.

$ python -m raspberryturk.embedded.vision.chessboard_perspective_transform

2017-02-11 16:05:41,371 - __main__ - INFO - Begin camera position recalibration...

2017-02-11 16:05:41,371 - __main__ - INFO - Camera position recalibration successful.